"I Do Not Know How I May Appear To The World, But To Myself I Seem To Have Been Only Like A Boy, Playing

"I do not know how I may appear to the world, but to myself I seem to have been only like a boy, playing on the sea-shore, and diverting myself, in now and then finding a smoother pebble or prettier shell than ordinary, whilst the great ocean of truth lay all undiscovered before me."

-Isaac Newton-

More Posts from Science-child and Others

New results from our Juno mission suggest the planet is home to “shallow lightning.” An unexpected form of electrical discharge, shallow lightning comes from a unique ammonia-water solution.

It was previously thought that lightning on Jupiter was similar to Earth, forming only in thunderstorms where water exists in all its phases – ice, liquid, and gas. But flashes observed at altitudes too cold for pure liquid water to exist told a different story. This illustration uses data obtained by the mission to show what these high-altitude electrical storms look like.

Understanding the inner workings of Jupiter allows us to develop theories about atmospheres on other planets and exoplanets!

Illustration Credit: NASA/JPL-Caltech/SwRI/MSSS/Gerald Eichstädt/Heidi N. Becker/Koji Kuramura

Make sure to follow us on Tumblr for your regular dose of space: http://nasa.tumblr.com

From Seed to Market: How NASA brings food to the table

Did you know we help farmers grow some of your favorite fruits, veggies and grains?

Our Earth-observing satellites track rainfall amounts, soil moisture, crop health, and more. On the ground, we partner with agencies and organizations around the world to help farmers use that data to care for their fields.

Here are a few ways we help put food on the table, from planting to harvest.

Planting

Did you plant seeds in science class to watch them sprout and grow? They all needed water, right? Our data helps farmers “see” how moist the soil is across large fields.

“When you’re not sure when to water your flowers or your garden, you can look at the soil or touch it with your hands. We are sort of ‘feeling’ the soil, sensing how much water is in the soil – from a satellite, 685 kilometers (about 426 miles) above Earth,” said John Bolten, the associate program manager of water resources for NASA’s Applied Sciences Program.

This spring, we worked with the U.S. Department of Agriculture and George Mason University to release Crop-CASMA, a tool that shows soil moisture and vegetation conditions for the United States. Because the tool can resolve smaller areas – about the size of a couple of golf courses – the USDA uses Crop-CASMA to help update farmers on their state’s soil moisture, crop health and growing progress.

Growing

It’s dangerous being a seedling.

Heavy spring rains or summer storms can flood fields and drown growing plants. Dry spells and droughts can starve them of nutrients. Insects and hail can damage them. Farmers need to keep a close eye on plants during the spring and summer months. Our data and programs help them do that.

For example, in California, irrigation is essential for agriculture. California’s Central Valley annually produces more than 250 types of crops and is one of the most productive agricultural regions in the country – but it’s dry. Some parts only get 6 inches of rain per year.

To help, Landsat data powers CropManage – an app that tells farmers how long to irrigate their fields, based on soil conditions and evapotranspiration, or how much water plants are releasing into the atmosphere. The warmer and drier the atmosphere, the more plants “sweat” and lose water that needs to be replenished. Knowing how long to irrigate helps farmers conserve water and be more efficient. In years like 2021, intense droughts can make water management especially critical.
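As a rough illustration of replacing the water that plants lose to evapotranspiration, the sketch below turns a water deficit into an irrigation run time. It is not CropManage's actual method: the function name, the simple water balance, and the efficiency parameter are all assumptions made for illustration.

```python
def irrigation_hours(et_mm, rain_mm, application_rate_mm_per_hr, efficiency=0.85):
    """Estimate hours of irrigation needed to replace evapotranspiration losses.

    et_mm: water lost to evapotranspiration since the last irrigation (mm)
    rain_mm: rainfall over the same period (mm)
    application_rate_mm_per_hr: how fast the irrigation system applies water
    efficiency: assumed fraction of applied water that reaches the roots
    """
    if application_rate_mm_per_hr <= 0:
        raise ValueError("application rate must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")

    # Rain offsets some of the loss; a surplus means no irrigation is needed.
    deficit_mm = max(et_mm - rain_mm, 0.0)

    # Apply extra water to make up for losses in the irrigation system itself.
    gross_mm = deficit_mm / efficiency
    return gross_mm / application_rate_mm_per_hr


# Hypothetical field: 35 mm lost, 5 mm of rain, system applies 10 mm per hour.
hours = irrigation_hours(35, 5, 10, efficiency=1.0)  # 3.0 hours
```

A warmer, drier week raises `et_mm`, so the run time grows, which matches the "sweating" picture in the text.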

Harvest

Leading up to harvest, farmers need to know their expected yields – and profits.

GEOGLAM, or the Group on Earth Observations Global Agricultural Monitoring Initiative, is a partnership between NASA Harvest, USDA’s Foreign Agricultural Service (FAS) and other global agencies to track and report on crop conditions around the world.

USDA FAS is one of the main users of a soil moisture measurement product developed by Bolten and his team at our NASA Goddard Space Flight Center to drive their crop forecasting system.

If you’re interested in more ways we support agriculture, stay tuned over the next few weeks to learn more about how satellites (and scientists) help put snacks on your table!


“If you read the history of the development of chemistry and particularly of physics, you will see that even such exact natural sciences could not, and still cannot, avoid basing their thought systems on certain hypotheses. In classical physics, up to the end of the 18th century, one of the working hypotheses, arrived at either unconsciously or half-consciously, was that space had three dimensions, an idea which was never questioned. The fact was always accepted, and perspective drawings of physical events, diagrams, or experiments, were always in accordance with that theory. Only when this theory is abandoned does one wonder how such a thing could ever have been believed. How did one come by such an idea? Why were we so caught that nobody ever doubted or even discussed the matter? It was accepted as a self-evident fact, but what was at the root of it? Johannes Kepler, one of the fathers of modern or classic physics, said that naturally space must have three dimensions because of the Trinity! So our readiness to believe that space has three dimensions is a more recent offspring of the Christian trinitarian idea.”

— Marie-Louise von Franz, Alchemy: An Introduction to the Symbolism and the Psychology

Q: Is your mind organized?

A: yes and no. Quantum mechanical order, so to speak.

#laughsinquantummechanicalsuperpositionwhilecrying

Taking Solar Science to New Heights

We’re on the verge of launching a new spacecraft to the Sun to take the first-ever images of the Sun’s north and south poles!

Credit: ESA/ATG medialab

Solar Orbiter is a collaboration between the European Space Agency (ESA) and NASA. After it launches — as soon as Feb. 9 — it will use Earth’s and Venus’s gravity to swing itself out of the ecliptic plane — the swath of space, roughly aligned with the Sun’s equator, where all the planets orbit. From there, Solar Orbiter’s bird’s eye view will give it the first-ever look at the Sun’s poles.


The Sun plays a central role in shaping space around us. Its massive magnetic field stretches far beyond Pluto, paving a superhighway for charged solar particles known as the solar wind. When bursts of solar wind hit Earth, they can spark space weather storms that interfere with our GPS and communications satellites — at their worst, they can even threaten astronauts.

To prepare for potential solar storms, scientists monitor the Sun’s magnetic field. But from our perspective near Earth and from other satellites roughly aligned with Earth’s orbit, we can only see a sidelong view of the Sun’s poles. It’s a bit like trying to study Mount Everest’s summit from the base of the mountain.

Solar Orbiter will study the Sun’s magnetic field at the poles using a combination of in situ instruments — which study the environment right around the spacecraft — and cameras that look at the Sun, its atmosphere and outflowing material in different types of light. Scientists hope this new view will help us understand not only the Sun’s day-to-day activity, but also its roughly 11-year activity cycles, thought to be tied to large-scale changes in the Sun’s magnetic field.

Solar Orbiter will fly within the orbit of Mercury — closer to our star than any Sun-facing cameras have ever gone — so the spacecraft relies on cutting-edge technology to beat the heat.


Solar Orbiter has a custom-designed titanium heat shield with a calcium phosphate coating that withstands temperatures more than 900 degrees Fahrenheit — 13 times the solar heating that spacecraft face in Earth orbit. Five of the cameras look at the Sun through peepholes in that heat shield; one observes the solar wind out the side.

Over the mission’s seven-year lifetime, Solar Orbiter will reach an inclination of 24 degrees above the Sun’s equator, increasing to 33 degrees with an additional three years of extended mission operations. At closest approach the spacecraft will pass within 26 million miles of the Sun.

Solar Orbiter will be our second major mission to the inner solar system in recent years, following on August 2018’s launch of Parker Solar Probe. Parker has completed four close solar passes and will fly within 4 million miles of the Sun at closest approach.
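To put the closest-approach distances quoted above in context, here is a small unit-conversion sketch. The conversion constants are standard definitions; the Mercury perihelion figure is an approximate value added here for comparison and does not come from the post.

```python
KM_PER_MILE = 1.609344          # international mile, exact by definition
KM_PER_AU = 149_597_870.7       # astronomical unit, exact by definition
MERCURY_PERIHELION_KM = 46.0e6  # approximate; assumption added for comparison


def miles_to_km(miles):
    """Convert a distance in miles to kilometers."""
    return miles * KM_PER_MILE


def km_to_au(km):
    """Convert a distance in kilometers to astronomical units."""
    return km / KM_PER_AU


# Closest approaches as stated in the text.
solar_orbiter_km = miles_to_km(26e6)  # about 41.8 million km
parker_km = miles_to_km(4e6)          # about 6.4 million km

# Solar Orbiter's closest approach in AU (Earth-Sun distances): about 0.28.
solar_orbiter_au = km_to_au(solar_orbiter_km)

# True if the closest approach lies inside Mercury's closest point to the Sun.
inside_mercury = solar_orbiter_km < MERCURY_PERIHELION_KM
```

Under these figures, Solar Orbiter's closest approach does fall inside Mercury's orbit, and Parker's is roughly six and a half times closer still.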

Solar Orbiter (green) and Parker Solar Probe (blue) will study the Sun in tandem.

The two spacecraft will work together: As Parker samples solar particles up close, Solar Orbiter will capture imagery from farther away, contextualizing the observations. The two spacecraft will also occasionally align to measure the same magnetic field lines or streams of solar wind at different times.

Watch the launch

The booster of a United Launch Alliance Atlas V rocket that will launch the Solar Orbiter spacecraft is lifted into the vertical position at the Vertical Integration Facility near Space Launch Complex 41 at Cape Canaveral Air Force Station in Florida on Jan. 6, 2020. Credit: NASA/Ben Smegelsky

Solar Orbiter is scheduled to launch on Feb. 9, 2020, during a two-hour window that opens at 11:03 p.m. EST. The spacecraft will launch on a United Launch Alliance Atlas V 411 rocket from Space Launch Complex 41 at Cape Canaveral Air Force Station in Florida.

Launch coverage begins at 10:30 p.m. EST on Feb. 9 at nasa.gov/live. Stay up to date with the mission at nasa.gov/solarorbiter!


Got a question about black holes? Let’s get to the bottom of these odd phenomena. Ask our black hole expert anything!

Black holes are mystifying yet terrifying cosmic phenomena. Unfortunately, people have a lot of ideas about them that are more science fiction than science. Don’t worry! Our black hole expert, Jeremy Schnittman, will be answering your questions in an Answer Time session on Wednesday, October 2 from 3 pm to 4 pm ET here on NASA’s Tumblr! Make sure to ask your question now by visiting http://nasa.tumblr.com/ask!

Jeremy joined the Astrophysics Science Division at our Goddard Space Flight Center in 2010 following postdoctoral fellowships at the University of Maryland and Johns Hopkins University. His research interests include theoretical and computational modeling of black hole accretion flows, X-ray polarimetry, black hole binaries, gravitational wave sources, gravitational microlensing, dark matter annihilation, planetary dynamics, resonance dynamics and exoplanet atmospheres. He has been described as a “general-purpose astrophysics theorist,” which he regards as quite a compliment.

Fun Fact: The computer code Jeremy used to make the black hole animations we featured last week is called “Pandurata,” after a species of black orchid from Sumatra. The name pays homage to the laser fusion lab at the University of Rochester where Jeremy worked as a high school student and wrote his first computer code, “Buttercup.” All the simulation codes at the lab are named after flowers.


Tomorrow’s Technology on the Space Station Today

Tablets, smart appliances, and other technologies that are an indispensable part of daily life are no longer state-of-the-art compared to the research and technology development going on over our heads. As we celebrate 20 years of humans continuously living and working in space aboard the International Space Station, we’re recapping some of the out-of-this-world tech development and research being done on the orbiting lab too.

Our Space Technology Mission Directorate (STMD) helps redefine state-of-the-art tech for living and working in space. Here are 10 technologies tried and tested on the space station with helping hands from its astronaut occupants over the years.

1. Astronaut Wanna-Bees

Astronauts on the space station are responsible for everything from conducting science experiments and deploying satellites to tracking inventory and cleaning. While all are necessary, the crew can delegate some jobs to the newest robotic inhabitants – Astrobees.

These cube-shaped robots can work independently or in tandem, carrying out research activities. Once they prove themselves, the bots will take on some of the more time-consuming tasks, such as monitoring the status of dozens of experiments. The three robots – named Bumble, Honey, and Queen – can operate autonomously following a programmed set of instructions or controlled remotely. Each uses cameras for navigation, fans for propulsion, and a rechargeable battery for power. The robots also have a perching arm that lets them grip handrails or hold items. These free-flying helpers take advantage of another STMD technology called Gecko Grippers that “stick” to any surface.

2. Getting a Grip in Microgravity

We wanted to develop tools for grabbing space junk, and something strong and super-sticky is necessary to collect the diverse material orbiting Earth. So, engineers studied the gecko lizard, perhaps the most efficient “grabber” on this planet. Millions of extremely fine hairs on the bottom of their feet make an incredible amount of contact with surfaces so the gecko can hold onto anything. That inspired our engineers to create a similar material.

Now the Gecko Gripper made by OnRobot is sold on the commercial market, supporting industrial activities such as materials handling and assembly. The NASA gecko adhesive gripper that’s being tested in microgravity on the Astrobee robots was fabricated on Earth. But other small plastic parts can now be manufactured in space.

3. Make It, or Don’t Take It

Frequent resupply trips from Earth to the Moon, Mars, and other solar system bodies are simply not realistic. In order to become truly Earth-independent and increase sustainability, we had to come up with ways to manufacture supplies on demand.

The first 3D printer in space was demonstrated on the space station in 2014, proving that it worked in microgravity. This paved the way for the first commercial 3D printer in space, which is operated by Made In Space. It has successfully produced more than 150 parts since its activation in 2016. Designs for tools, parts, and many other objects are transmitted to the station by the company, which also oversees the print jobs. The printer applies heat and pressure to different kinds of plastic filament in a process that’s similar to the way a hot glue gun works. The molten material is precisely deposited using a back-and-forth motion until the part forms. The next logical step for efficient 3D printing was using recycled plastics to create needed objects.

4. The Nine Lives of Plastic

To help fragile technology survive launch and keep food safe for consumption, NASA employs a lot of single-use plastics. That material is a valuable resource, so we are developing a number of ways to repurpose it. The Refabricator, delivered to the station in 2018, is designed to reuse everything from plastic bags to packing foam. The waste plastic is super-heated and transformed into the feedstock for its built-in 3D printer. The filament can be used repeatedly: a 3D-printed wrench that’s no longer needed can be dropped into the machine and used to make any one of the pre-programmed objects, such as a spoon. The dorm-fridge-sized machine created by Tethers Unlimited Inc. successfully manufactured its first object, but the technology experienced some issues in the bonding process likely due to microgravity’s effect on the materials. Thus, the Refabricator continues to undergo additional testing to perfect its performance.

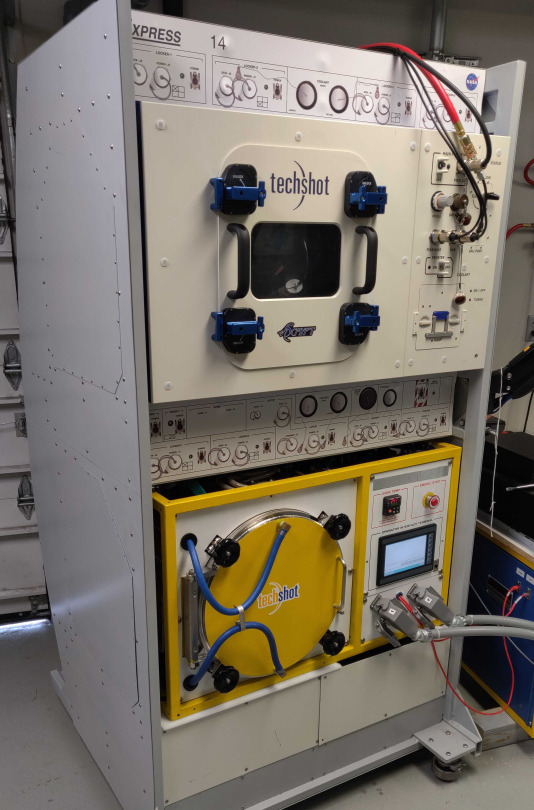

5. Speed Metal

An upcoming hardware test on the station will try out a new kind of 3D printer. The on-demand digital manufacturing technology is capable of using different kinds of materials, including plastic and metals, to create new parts. We commissioned TechShot Inc. to build the hardware to fabricate objects made from aerospace-grade metals and electronics. On Earth, FabLab has already demonstrated its ability to manufacture strong, complex metal tools and other items. The unit includes a metal additive manufacturing process, furnace, and endmill for post-processing. It also has built-in monitoring for in-process inspection. When the FabLab is installed on the space station, it will be remotely operated by controllers on Earth. Right now, another printer created by the same company is doing a different kind of 3D printing on station.

6. A Doctor’s BFF

Today scientists are also learning to 3D print living tissues. However, the force of gravity on this planet makes it hard to print cells that maintain their shape. So on Earth, scientists use scaffolding to help keep the printed structures from collapsing.

The 3D BioFabrication Facility (BFF) created by TechShot Inc. could provide researchers a gamechanger that sidesteps the need to use scaffolds by bioprinting in microgravity. This first American bioprinter in space uses bio-inks that contain adult human cells along with a cell-culturing system to strengthen the tissue over time. Eventually, that means that these manufactured tissues will keep their shape once returned to Earth’s gravity! While the road to bioprinting human organs is likely still many years away, these efforts on the space station may move us closer to that much-needed capability for the more than 100,000 people on the wait list for organ transplant.

7. Growing Vitamins

Conditions in space are hard on the human body, and they also can be punishing on food. Regular deliveries of food to the space station refresh the supply of nutritious meals for astronauts. But prepackaged food stored on the Moon or sent to Mars in advance of astronauts could lose some nutritional value over time.

That’s why the BioNutrients experiment is underway. Two different strains of baker’s yeast which are engineered to produce essential nutrients on demand are being checked for shelf life in orbit. Samples of the yeast are being stored at room temperature aboard the space station and then are activated at different intervals, frozen, and returned to Earth for evaluation. These tests will allow scientists to check how long their specially-engineered microbes can be stored on the shelf, while still supplying fresh nutrients that humans need to stay healthy in space. Such microbes must be able to be stored for months, even years, to support the longer durations of exploration missions. If successful, these space-adapted organisms could also be engineered for the potential production of medicines. Similar organisms used in this system could provide fresh foods like yogurt or kefir on demand. Although designed for space, this system also could help provide nutrition for people in remote areas of our planet.

8. Rough and Ready

Everything from paints and container seals to switches and thermal protection systems must withstand the punishing environment of space. Atomic oxygen, charged-particle radiation, collisions with meteoroids and space debris, and temperature extremes (all combined with the vacuum) are just some conditions that are only found in space. Not all of these can be replicated on Earth. In 2001, we addressed this testing problem with the Materials International Space Station Experiment (MISSE). Technologists can send small samples of just about any technology or material into low-Earth orbit for six months or more. Mounted to the exterior of the space station, MISSE has tested more than 4,000 materials. More sophisticated hardware developed over time now supports automatic monitoring that sends photos and data back to researchers on Earth. Renamed the MISSE Flight Facility, this permanent external platform is now owned and operated by the small business, Alpha Space Test & Research Alliance LLC. The woman-owned company is developing two similar platforms for testing materials and technologies on the lunar surface.

9. Parachuting to Earth

Small satellites could provide a cheaper, faster way to deliver small payloads to Earth from the space station. To do just that, the Technology Education Satellite, or TechEdSat, develops the essential technologies with a series of CubeSats built by college students in partnership with NASA. In 2017, TechEdSat-6 deployed from the station, equipped with a custom-built parachute called exo-brake to see if a controlled de-orbit was possible. After popping out of the back of the CubeSat, struts and flexible cords warped the parachute like a wing to control the direction in which it travelled. The exo-brake uses atmospheric drag to steer a small satellite toward a designated landing site. The most recent mission in the series, TechEdSat-10, was deployed from the station in July with an improved version of an exo-brake. The CubeSat is actively being navigated to the target entry point in the vicinity of NASA’s Wallops Flight Facility on Wallops Island, Virginia.

10. X-ray Vision for a Galactic Position System

Independent navigation for spacecraft in deep space is challenging because objects move rapidly and the distances between are measured in millions of miles, not the mere thousands of miles we’re used to on Earth. From a mission perched on the outside of the station, we were able to prove that X-rays from pulsars could be helpful. A number of spinning neutron stars consistently emit pulsating beams of X-rays, like the rotating beacon of a lighthouse. Because the rapid pulsations of light are extremely regular, they can provide the precise timing required to measure distances.

The Station Explorer for X-Ray Timing and Navigation (SEXTANT) demonstration conducted on the space station in 2017 successfully measured pulsar data and used navigation algorithms to locate the station as it moved in its orbit. The washing machine-sized hardware, which also produced new neutron star science via the Neutron star Interior Composition Explorer (NICER), can now be miniaturized to develop detectors and other hardware to make pulsar-based navigation available for use on future spacecraft.
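The link between pulse timing and position can be sketched with a very idealized model. If a pulse arrives earlier than predicted for a known reference point, the spacecraft is displaced toward that pulsar by the speed of light times the timing difference, and three pulsars in different directions pin down a 3D position. This is an illustration under stated assumptions (plane waves, perfect clocks, no relativistic corrections), not the SEXTANT algorithm; all function names are hypothetical.

```python
C_KM_PER_S = 299_792.458  # speed of light in km/s


def det3(m):
    """Determinant of a 3x3 matrix given as a list of three rows."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def position_from_delays(directions, early_by_s):
    """Solve n_i . r = c * dt_i for the position r relative to a reference point.

    directions: three unit vectors pointing toward three pulsars
    early_by_s: how much earlier (positive) or later (negative) each pulse
                arrived compared with the prediction for the reference point
    """
    rhs = [C_KM_PER_S * dt for dt in early_by_s]
    d = det3(directions)
    if abs(d) < 1e-12:
        raise ValueError("pulsar directions must not lie in one plane")

    # Cramer's rule: replace one column at a time with the right-hand side.
    position = []
    for col in range(3):
        m = [list(row) for row in directions]
        for r in range(3):
            m[r][col] = rhs[r]
        position.append(det3(m) / d)
    return position


# Hypothetical pulsars along the x, y and z axes.
axes = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
offset_km = position_from_delays(axes, [1e-3, 0.0, -2e-3])
```

A one-millisecond timing difference corresponds to about 300 km, which is why the regularity of the pulses matters so much for this kind of navigation.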

As NASA continues to identify challenges and problems for upcoming deep space missions such as Artemis, humans on Mars, and exploring distant moons such as Titan, STMD will continue to further technology development on the space station and Earth.


Celebrating Spitzer, One of NASA’s Great Observatories

As the Spitzer Space Telescope’s 16-year mission ends, we’re celebrating the legacy of our infrared explorer. It was one of four Great Observatories – powerful telescopes also including Hubble, Chandra and Compton – designed to observe the cosmos in different parts of the electromagnetic spectrum.

Light our eyes can see

The part of the spectrum we can see is called, predictably, visible light. But that’s just a small segment of all the wavelengths of the spectrum. The Hubble Space Telescope observes primarily in the visible spectrum. Our Chandra X-ray Observatory is designed to detect (you guessed it) X-ray emissions from very hot regions of the universe, like exploded stars and matter around black holes. Our Compton Gamma Ray Observatory, retired in 2000, produced the first all-sky survey in gamma rays, the most energetic and penetrating form of light.

Then there’s infrared…

Infrared radiation, or infrared light, is another type of energy that we can’t see but can feel as heat. All objects in the universe emit some level of infrared radiation, whether they’re hot or cold. Spitzer used its infrared instrument to make discoveries in our solar system (including Saturn’s largest ring) all the way to the edge of the universe. From stars being born to planets beyond our solar system (like the seven Earth-size exoplanets around the star TRAPPIST-1), Spitzer’s science discoveries will continue to inspire the world for years to come.

Multiple wavelengths

Together, the work of the Great Observatories gave us a more complete view and understanding of our universe.

Hubble and Chandra will continue exploring our universe, and next year they’ll be joined by an even more powerful observatory … the James Webb Space Telescope!

Many of Spitzer’s breakthroughs will be studied more precisely with the Webb Space Telescope. Like Spitzer, Webb is specialized for infrared light. But with its giant gold-coated beryllium mirror and nine new technologies, Webb is about 1,000 times more powerful. The forthcoming telescope will be able to push Spitzer’s science findings to new frontiers, from identifying chemicals in exoplanet atmospheres to locating some of the first galaxies to form after the Big Bang.

We can’t wait for another explorer to join our space telescope superteam!


What kind of math is needed to get to Mars? How is the path of the lander calculated?
